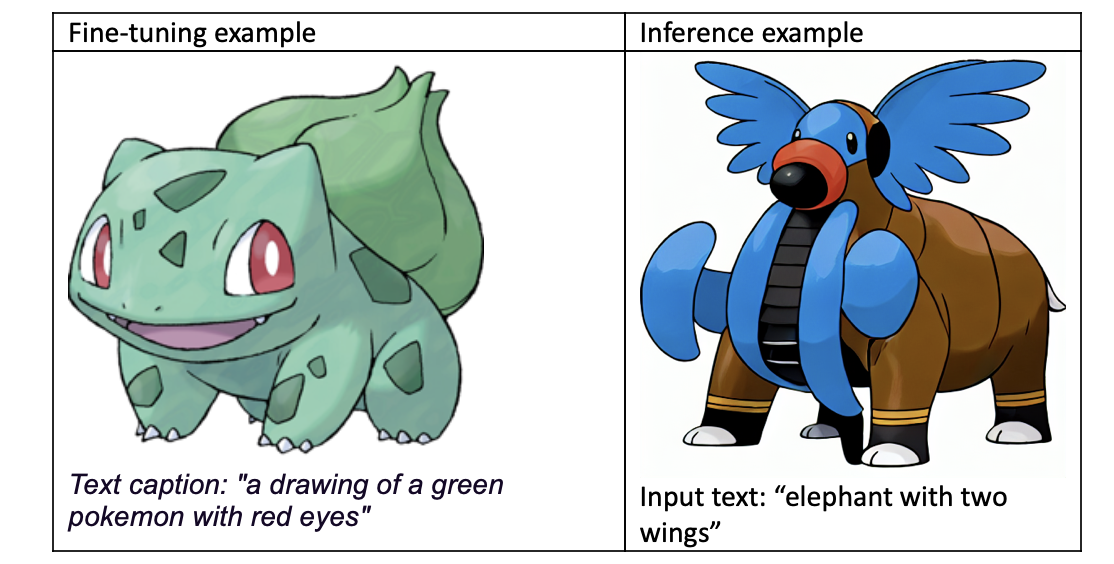

This project develops a Text-To-Pokémon model by fine-tuning the Stable Diffusion (SD) model. SD is pre-trained on a large, diverse dataset; fine-tuning continues training that pre-trained model on a custom dataset with a specific style, in this case Justin Pinkney's captioned Pokémon dataset of 800+ images.
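When the captioned images are stored in a local folder and passed to the training script through `--train_data_dir`, the Hugging Face image-folder loader expects, as far as I know, a `metadata.jsonl` file next to the images, with a `file_name` field and a caption field (`text` is the script's default caption column). The sketch below writes such a file. The helper name `write_metadata` and the example caption are hypothetical and not part of the original project.

```python
import json
from pathlib import Path


def write_metadata(train_dir, captions):
    """Write a metadata.jsonl file that pairs image file names with captions.

    `captions` maps an image file name (relative to `train_dir`) to its caption.
    The field names "file_name" and "text" are assumptions based on the
    image-folder convention; adjust them if your script uses other columns.
    """
    train_dir = Path(train_dir)
    train_dir.mkdir(parents=True, exist_ok=True)
    out_path = train_dir / "metadata.jsonl"
    with out_path.open("w", encoding="utf-8") as f:
        for file_name, text in captions.items():
            # One JSON object per line, one line per image.
            f.write(json.dumps({"file_name": file_name, "text": text}) + "\n")
    return out_path


# Example usage (hypothetical file name and caption):
# write_metadata("path_to_your_dataset",
#                {"0001.png": "a drawing of a green pokemon with red eyes"})
```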

Reference: Huggingface/Train/Text2image

    export MODEL_NAME="CompVis/stable-diffusion-v1-4"
    export TRAIN_DIR="path_to_your_dataset"
    export OUTPUT_DIR="path_to_save_model"

    accelerate launch train_text_to_image.py \
      --pretrained_model_name_or_path=$MODEL_NAME \
      --train_data_dir=$TRAIN_DIR \
      --use_ema \
      --resolution=512 --center_crop --random_flip \
      --train_batch_size=1 \
      --gradient_accumulation_steps=4 \
      --gradient_checkpointing \
      --mixed_precision="fp16" \
      --max_train_steps=15000 \
      --learning_rate=1e-05 \
      --max_grad_norm=1 \
      --lr_scheduler="constant" --lr_warmup_steps=0 \
      --output_dir=${OUTPUT_DIR}
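To get a feel for what these flags mean in practice, the rough arithmetic below estimates the effective batch size and how many passes over the data the run makes. It is only a sketch under stated assumptions: a single GPU, `max_train_steps` counting optimizer updates (which I believe is how the referenced script counts them), and a dataset size of 800, since the text only says "800+" images.

```python
# Values copied from the training command above.
train_batch_size = 1
gradient_accumulation_steps = 4
max_train_steps = 15000

# Assumptions, not stated in the original text.
num_processes = 1    # assumption: training on a single GPU
dataset_size = 800   # assumption: the dataset is described only as "800+" images

# Each optimizer update accumulates gradients over this many images.
effective_batch_size = train_batch_size * gradient_accumulation_steps * num_processes

# Total images processed over the whole run, and the matching number of epochs.
images_seen = effective_batch_size * max_train_steps
approx_epochs = images_seen / dataset_size

print(f"effective batch size: {effective_batch_size}")
print(f"images seen: {images_seen}")
print(f"approximate epochs: {approx_epochs:.1f}")
```

With a dataset this small, the model sees each image many times, which helps explain why the learning rate is kept low and constant.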

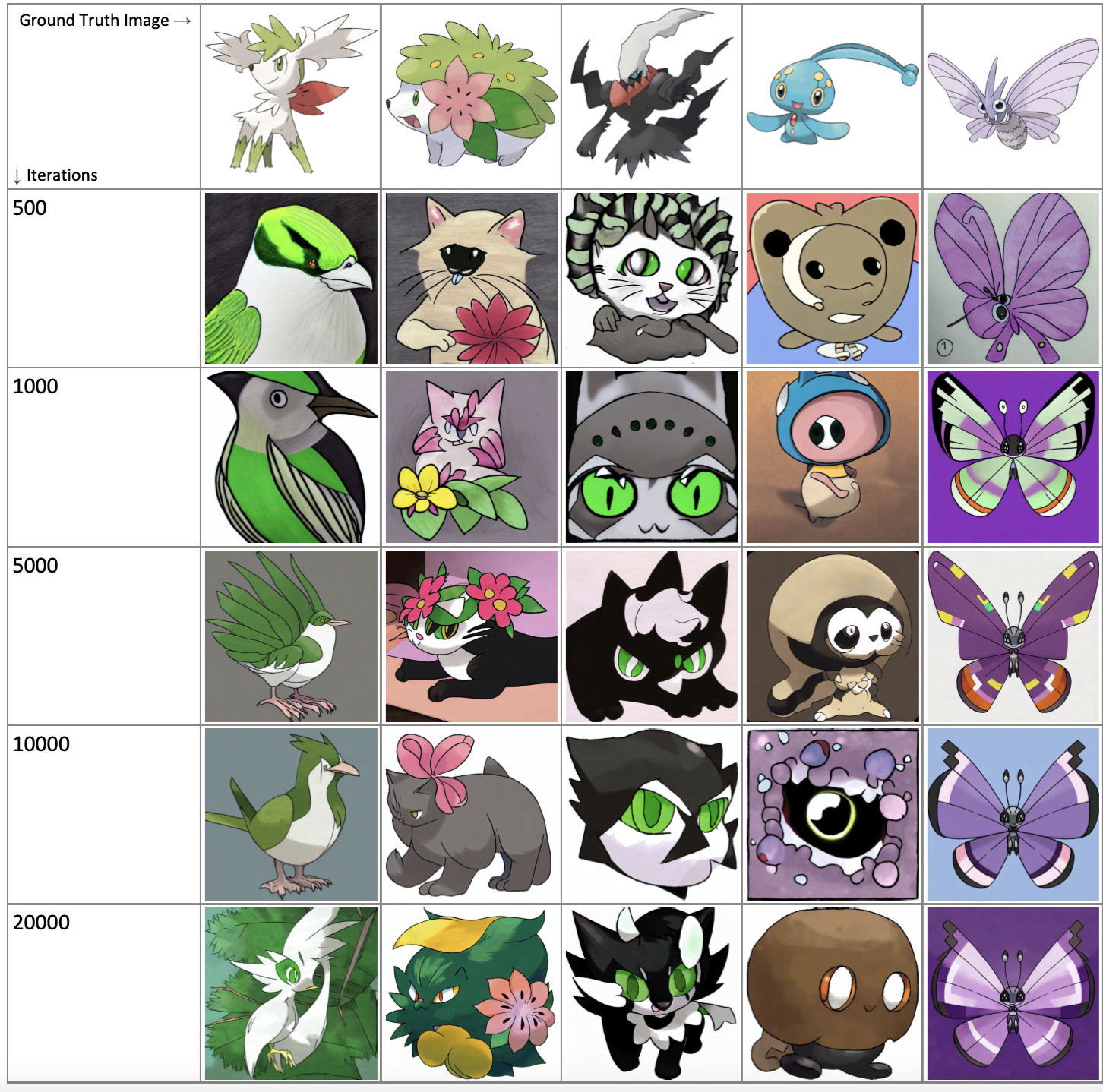

Training and Testing Samples

Generated Images During Training

Comparing the images generated at 500 iterations with those at 20000 iterations, the earlier ones show flaws such as irregular faces and twisted eyes, while the style and quality of the later ones are much closer to the ground-truth Pokémon images in the first row. Image quality clearly improves as training progresses.