In this project, we implement the Function-as-a-Service (FaaS) management policy proposed in the paper *Serverless in the Wild: Characterizing and Optimizing the Serverless Workload at a Large Cloud Provider* and simulate a cloud environment to evaluate the policy's performance. The simulation is based on Azure 2019 invocation data. The project report is here.

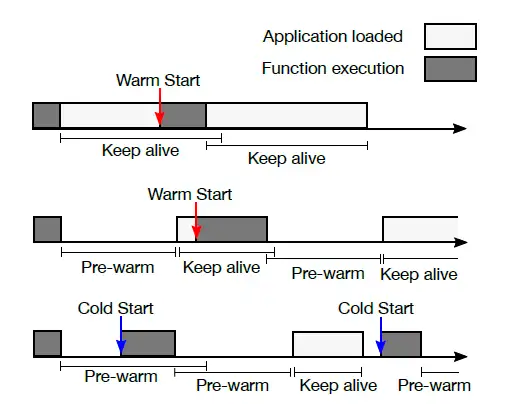

The key idea of the policy is to unload the application code from memory for a pre-warm window after an execution, then load it again and keep it in memory for a keep-alive window in anticipation of the next invocation. When the application code is already in memory, its functions can be launched quickly, which is called a warm start. In a cold start, by contrast, the code must first be fetched from persistent storage, so the function takes longer to start. However, keeping the application code in memory at all times can be prohibitively expensive, especially for applications that run briefly and infrequently. This project therefore aims to balance performance against resource cost (wasted memory time), i.e., to minimize the number of cold starts while keeping memory waste low.
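The warm/cold distinction can be illustrated with a minimal sketch. It assumes the idle time is measured from the end of one execution to the next invocation, and that an invocation arriving exactly on a window boundary counts as warm; the function name `classify_invocation` and its return shape are hypothetical and not taken from this project's code.

```python
def classify_invocation(idle_time, pre_warm, keep_alive):
    """Return (is_warm_start, wasted_memory_time) for one invocation.

    All arguments use the same time unit (e.g. minutes).
    Boundary handling here is an assumption, not the paper's exact rule.
    """
    if idle_time < pre_warm:
        # The invocation arrived while the code was still unloaded:
        # cold start, but no memory was held idle.
        return False, 0
    if idle_time <= pre_warm + keep_alive:
        # The code was already loaded: warm start. Memory sat idle
        # from the end of the pre-warm window until the invocation.
        return True, idle_time - pre_warm
    # The keep-alive window expired before the invocation arrived:
    # cold start, and the whole keep-alive window was wasted.
    return False, keep_alive
```

For example, with a pre-warm window of 5 and a keep-alive window of 10, an idle time of 8 yields a warm start with 3 units of wasted memory time, while an idle time of 20 yields a cold start with 10 units wasted.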

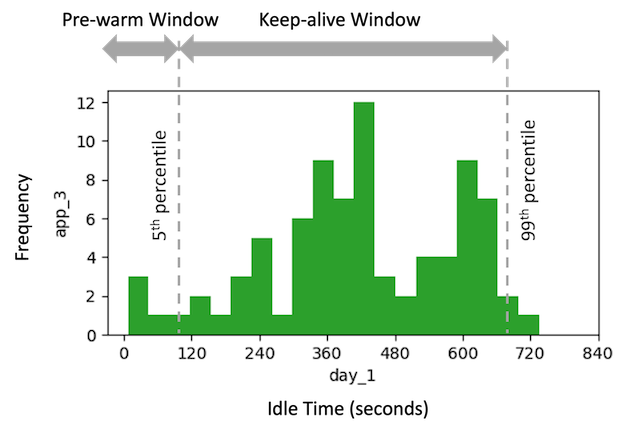

The proposed policy determines the pre-warm and keep-alive windows from the histogram of each application's idle times (ITs). The pre-warm window is set to the 5th percentile of the app's ITs, and the keep-alive window to the 99th percentile minus the 5th percentile. As a result, the app code is out of memory during the pre-warm window, when the next invocation is unlikely to arrive, and in memory during the keep-alive window, when most invocations arrive, which maximizes the number of warm starts.
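The window computation can be sketched as follows. This is a simplified illustration that works on a raw list of observed idle times with a nearest-rank percentile, rather than on a binned histogram; the names `percentile` and `compute_windows` are hypothetical, and the percentile method is an assumption.

```python
import math


def percentile(sorted_values, p):
    """Nearest-rank percentile of an ascending list, with 0 < p <= 100."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def compute_windows(idle_times, head_pct=5, tail_pct=99):
    """Return (pre_warm, keep_alive) derived from observed idle times.

    pre_warm   = head percentile of the idle times
    keep_alive = tail percentile minus head percentile
    """
    values = sorted(idle_times)
    head = percentile(values, head_pct)
    tail = percentile(values, tail_pct)
    return head, tail - head


# Idle times of 1, 2, ..., 100 minutes.
pre_warm, keep_alive = compute_windows(range(1, 101))
print(pre_warm, keep_alive)  # 5 94
```

With these windows, the code is loaded 5 minutes after an execution ends and kept for another 94 minutes, so any invocation whose idle time falls between the 5th and 99th percentiles finds the code in memory.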